At SHALB we build infrastructures and platforms. An infrastructure is a set of components used to launch workloads. The components could be networks, DNS servers, container orchestrators, databases, functions, policies, etc.

By a platform we mean a set of reference infrastructure patterns. We design them for SRE and product teams so that they can launch infrastructures from those examples.

Building DevOps Platforms

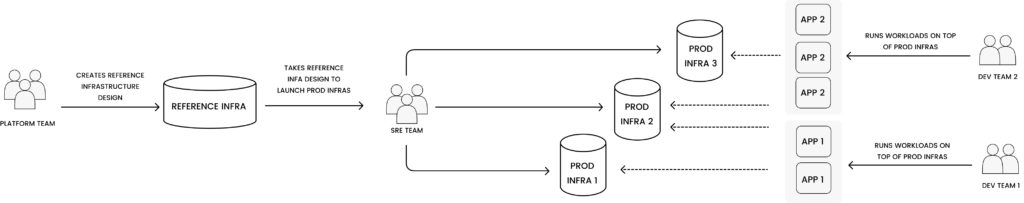

So the platform delivery looks like this:

To follow this approach, the infrastructures should be 100% described as code. Only then can you apply, test, and propagate changes to all dependent infrastructures.

Suppose we want to deliver an infrastructure using a platform approach. Let’s take a sample platform and see what our workflow would look like.

Our platform will be:

AWS

- `terraform/`
  - `/vpc`: module that defines network segmentation in the cloud
  - `/dns`: module that creates DNS records with Route 53
  - `/eks`: module that launches a managed Kubernetes cluster
- `kubernetes/`
  - `/ingress-nginx`: manifests that bring up the Ingress controller on EKS
- `helm/`
  - `/argocd`: ArgoCD, a deployment controller
  - `/externaldns`: K8s controller that updates DNS records
- `env/`
  - `/dev`: infrastructure values and the sequence for launching the infrastructure (tf files, Bash scripts, READMEs)
  - `/stage`: another version of the infrastructure

Even if we store this in Git and have a pretty decent runbook, we face the following challenges:

- How to fully automate infrastructure deployments

- How to implement continuous infrastructure testing

- How to enable propagation of infrastructure changes across different environments

The root cause of the problem is that infrastructure code mixes different technologies, such as Terraform, Helm, Kubernetes manifests, and Bash scripts. This makes it hard, and sometimes even impossible, to couple them together.

We can address these issues with cluster.dev.

Abstractions

Cluster.dev has a few abstractions that help solve the problems of platform delivery.

1. Unit.

Like a basic Lego brick, a unit is responsible for passing variables to a particular technology, for example a Terraform module, a Helm chart, or a Bash script. Executing a unit produces outputs.

2. Stack Template.

A set of different units, coupled together with a common templating engine. Stack Template defines infrastructure patterns or even the whole platform.

3. Stack.

A set of variables and secrets that is applied to a Stack Template and executed. After the Stack is executed, the infrastructure objects are ready to use.

4. Project.

A home for multiple Stacks that can share global variables and exchange outputs. Stacks in a Project can be executed in parallel and can depend on one another.

5. Backend.

Like a Terraform backend, it defines where the execution state is stored: locally or in cloud object storage.

6. Generators.

As part of a Stack Template, you can script dialogues through which end users populate Stack values interactively.
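To make the relationship between these abstractions more concrete, here is a minimal Python sketch. It is not cluster.dev code and does not use its API: the `Unit` class, the `kind` labels, and the `execution_order` and `apply_stack` helpers are all hypothetical names chosen for illustration. It only shows the general idea of units that declare dependencies, run in dependency order, and pass their outputs downstream.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter  # standard library, Python 3.9+


@dataclass
class Unit:
    """Hypothetical building block: takes inputs, returns outputs."""
    name: str
    kind: str  # illustrative label such as "terraform" or "helm"
    depends_on: list[str] = field(default_factory=list)

    def run(self, inputs: dict) -> dict:
        # A real unit would invoke the underlying tool.
        # This sketch only fabricates an identifier from its inputs.
        return {f"{self.name}_id": f"{self.kind}:{inputs['env']}"}


def execution_order(units: list[Unit]) -> list[str]:
    """Return unit names so that each unit follows its dependencies."""
    graph = {u.name: set(u.depends_on) for u in units}
    return list(TopologicalSorter(graph).static_order())


def apply_stack(units: list[Unit], variables: dict) -> dict:
    """Run units in dependency order, feeding earlier outputs to later units."""
    by_name = {u.name: u for u in units}
    outputs: dict = {}
    for name in execution_order(units):
        outputs.update(by_name[name].run({**variables, **outputs}))
    return outputs


# A "stack template" here is just a list of units, declared in any order.
template = [
    Unit("eks", "terraform", depends_on=["vpc"]),
    Unit("vpc", "terraform"),
    Unit("argocd", "helm", depends_on=["eks"]),
]

order = execution_order(template)
result = apply_stack(template, {"env": "dev"})
print(order)
print(result)
```

The units are declared out of order on purpose: the dependency graph, not the declaration order, decides that `vpc` runs before `eks` and `eks` before `argocd`.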

Workflow

Now, let’s get back to our platform and check the workflow.

Platform team -> creates TF modules, Helm charts, etc. The code is hosted in a monorepo or in multiple repos.

Platform team -> assembles an infrastructure pattern as a Stack Template and keeps it in a Git repo, where it can be versioned, tagged, etc.

SRE/Product team -> declares values for the Stack, picks the version of the Stack Template by Git URL, and executes a one-shot command, `cdev apply`, which creates or updates the target infrastructure(s).
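The split between a shared template and per-environment values can be illustrated with a small Python sketch. This is not how cluster.dev renders templates. The `STACK_TEMPLATE` string, its field names, and the `render_stack` helper are assumptions made up for the example, and the domain and region values are placeholders. The point is only that one pattern, maintained by the platform team, yields different infrastructures depending on the values a consuming team supplies.

```python
from string import Template

# Hypothetical pattern owned by the platform team.
# Placeholders stand for the values each environment must provide.
STACK_TEMPLATE = Template(
    "cluster: ${name}-eks\n"
    "region: ${region}\n"
    "domain: ${name}.${base_domain}\n"
)


def render_stack(values: dict) -> str:
    """Fill in the template; raises KeyError if a required value is missing."""
    return STACK_TEMPLATE.substitute(values)


# Two environments reuse the same pattern and differ only in values.
dev = render_stack(
    {"name": "dev", "region": "eu-central-1", "base_domain": "example.com"}
)
stage = render_stack(
    {"name": "stage", "region": "eu-central-1", "base_domain": "example.com"}
)

print(dev)
print(stage)
```

Because `substitute` fails on a missing value instead of silently leaving a gap, an incomplete set of values is caught before anything is applied.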

Alternatives

So, what other options could be an alternative to this approach?

- Define everything in Terraform. Looks fine, but has a few downsides. Sometimes you need to run `terraform apply` several times, which defeats the idea of a one-shot run. Also, you have to keep Helm chart values or raw Kubernetes manifests converted to HCL code, which is a fairly complex task that adds little value.

- Pulumi. A great option if your platform team is good at programming in TypeScript or .NET. The downside of Pulumi is that you need to rewrite or convert all the Terraform modules you use, and maintain them on your own.

- Crossplane. Basically a good option if you are a heavy Kubernetes user, because you need a running cluster before you can launch anything. You also need common templating for your manifests, or Helm to handle it. Note that the number of supported objects is smaller than in Terraform.

- CDK. There are the AWS CDK, CDK for Terraform, and CDK for Kubernetes (cdk8s). With any of them, you’ll have to rewrite the existing code and maintain it.
